Site Updates and Migration to Cloudflare Pages

Improvements, Optimisations, and a Better Stack with Cloudflare Hosting

This is my second meta post on site development... and a lot has changed! In this post, I'll walk through some changes on the site, along with my decision-making process on migrating to Cloudflare, and some general tips in case you're going through something similar.

But first things first. Meme.

What's new?

The previous meta update was dated February 4. That time, I revised the site generator, ditching the wavering framework known as Jekyll and moving to Eleventy. This enabled more rapid testing and development of new features, some of which are...

- Frontend

  - Cooler UI(?) - Continuous

  - Modern Web Elements ⚡️: Alerts, Details, Spoiler - May-September

  - Image Lightbox (you can now click on images to expand them) - October

  - Link Anchors 🔗 (try hovering your mouse to the left of headings) - October

- Content

  - Home Page (uses carousels to cycle through content, so that the page isn't as visually bloated) - May

  - Privacy Policy - September

  - Assorted Blog Posts (including a series on digital audio synthesis, CTF writeups, and 4 new compositions) - Continuous

- Optimisations

  - Lazy Loading (iframes, images, Disqus) - May

  - Images (responsiveness, etc.) - May

  - JS/CSS Minification - September

  - Migrate from MathJax to KaTeX - November

  - Better Browser Caching for Assets - Now!

- Under the Hood

Migrating Hosting to Cloudflare Pages

The rest of this post deals with more technical aspects. Why did I make these decisions? What low-level improvements are there? What do I look forward to in the future?

While the previous migration dealt with site generation, today's migration is twofold:

- Migrating the hosting service from GitHub Pages to Cloudflare Pages

- Migrating the domain name from trebledj.github.io to trebledj.me

To the point: why the switch? Plenty of users are content with GitHub Pages. Why am I not? Although perfectly suited for simple static sites, GitHub Pages lacks server-side flexibility and customisations.

Analysis

To be fair, I've debated long and hard between GitHub Pages, Cloudflare Pages, and Netlify. These seem to be the most popular, most mature static site solutions. [1]

Anyhow, time for a quick comparison:

| | GitHub Pages | Cloudflare Pages | Netlify |
|---|---|---|---|
| Deploy from GitHub Repo | Yes | Yes | Yes |
| Domain Registration [2] | No | Yes | Yes |
| Custom Domain | Yes | Yes | Yes |
| Custom Headers | No | Yes | Yes |
| CI/CD | Free (public repos); 2000 action minutes/month (private repos) | 500 builds/month | 300 build minutes/month |
| Server-side Analytics | No | Yes (detailed analytics: $$$) | Yes ($$$) |
| Server-side Redirects [3] | No | Yes | Yes |
| Serverless | No | Yes | Yes |
| Plugins | No | No | Yes |

See a more thorough comparison on Bejamas.

Although Netlify and Cloudflare Pages are both strong contenders, I eventually chose Cloudflare Pages for its decent domain name price, analytics, its security-centric view, and a whole swath of other features.

For dynamic sites, Netlify wins hands down. Their plugin ecosystem is quite the gamechanger. The catch is that plugins may be pricey (e.g. most database plugins come from third-party vendors, which do provide free tiers, albeit limited).

For static sites, Cloudflare Pages is optimal.

Custom Headers: Caching

Custom headers can be used for different things. But one thing that stands out is browser-side caching.

When a browser loads content, it generally caches assets (images, JS, CSS) so that when the user visits another page on your domain, those assets are loaded directly from the local cache instead of over the network. As a result, network load is reduced and the page loads faster.

The server which delivers the files can tell your browser how long the files should be cached, using the cache-control header.

GitHub Pages tells our browser to only cache for 600 seconds (10 minutes). This applies to all files (both HTML documents and assets). On the other hand, Cloudflare Pages has longer defaults and offers more flexibility, especially for assets.

We can use curl to fetch the headers and compare the results. At the time of writing, the cache duration with Cloudflare Pages is longer (4 hours by default). Thanks to their flexibility, we can customise this further by...

- Changing the max-age through Cloudflare's Browser TTL setting.

- Enabling caching for other files (e.g. JSON search data).

- And more~
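To make the comparison of cache durations concrete, here's a minimal sketch that pulls `max-age` out of a `cache-control` value. The function name `parse_max_age` and the two header strings are illustrative assumptions (chosen to mirror the 10-minute and 4-hour figures mentioned above), not captured output from either host.

```python
from typing import Optional


def parse_max_age(cache_control: str) -> Optional[int]:
    """Return the max-age (in seconds) from a Cache-Control value, or None."""
    for directive in cache_control.split(","):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "max-age" and value.strip().isdigit():
            return int(value.strip())
    return None


# Hypothetical header values, mirroring the durations discussed in this post.
short_lived = parse_max_age("max-age=600")
long_lived = parse_max_age("public, max-age=14400, must-revalidate")

print(short_lived, "seconds")      # 600 seconds (10 minutes)
print(long_lived // 3600, "hours")  # 4 hours
```

The same helper could be fed the real header values returned by curl to check what a browser is actually being told.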

High Cache Duration

Having a high cache duration may sound good, but there's one problem. Newer pages/content may not update immediately. The browser will need to wait for the cache to expire before fetching the content.

One way around this is to use cache busting. The idea is to use a different asset filename every time the contents are modified. When the browser sees the new filename, it'll request the file instead of loading it from cache (since it's not in the cache). This way, we can ensure a high cache duration (for assets) with zero "cache lag".
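As a rough illustration of cache busting, the sketch below derives a filename from a hash of the file's contents, so the name only changes when the contents do. The helper name `busted_name`, the 8-character hash length, and the example path are my own assumptions; build tools and static site generators usually offer their own way of doing this.

```python
import hashlib
from pathlib import PurePosixPath


def busted_name(filename: str, content: bytes, length: int = 8) -> str:
    """Insert a short content hash before the file extension."""
    digest = hashlib.sha256(content).hexdigest()[:length]
    path = PurePosixPath(filename)
    return str(path.with_name(f"{path.stem}.{digest}{path.suffix}"))


# Same contents -> same name (cache hit); changed contents -> new name (fresh fetch).
old = busted_name("css/main.css", b"body { color: black; }")
new = busted_name("css/main.css", b"body { color: navy; }")
print(old)
print(new)
print(old != new)  # True
```

Since the HTML references the new filename after each change, the asset itself can be cached for a very long time.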

By caching static assets for a longer duration, we speed up subsequent page loads. And as a result, our Lighthouse Performance metric improves! (ceteris paribus)

Sweet Server-Side Spectacles

As you may notice, GitHub Pages sorely lacks server-side customisations, focusing solely on the front-end experience. Let's talk about analytics.

Analytics

Although Cloudflare Pages provides integrated server-side analytics, they are underwhelmingly rudimentary. Detailed analytics (e.g. who visits which page and when) are stashed behind a paywall. So in theory, server-side analytics are great! In practice? 🤑🤑🤑. Also: bots.

Server-side analytics differ from client-side analytics: the former relies on the initial HTTP(S) requests to the server, while the latter relies on a JS beacon script. The two approaches differ drastically when we consider the performance impact. With server-side, analytics data is mostly derived from headers in the incoming web request. Usually, the browser type (User-Agent) and referrer (Referer, as the header is spelled in HTTP) are provided by the browser. [4] This is not as flexible as client-side, but it's definitely more performant (for the client) and offers more privacy. On the other hand, there's the issue of bots polluting the data, such as web crawlers, which usually just fetch HTML and don't load scripts.

With client-side, the browser needs to run a separate script, adding to the network load. Some scripts are lightweight and simple. Others may fire a bunch of network requests which hinder performance, whilst causing a privacy/compliance nightmare (looking at you, Google Analytics).

GitHub Pages currently doesn't plan to support server-side analytics. Cloudflare Pages does, but the free version doesn't offer much 💩🤑. Guess I'll just stick with Cloudflare's privacy-focused client-side solution.
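To give a feel for what header-based (server-side) analytics involves, here's a small sketch that tallies requests using only the User-Agent and Referer headers, with a crude bot filter. Everything here is hypothetical: the `BOT_HINTS` substrings are a naive heuristic of my own, and the sample requests are made up. It is not how Cloudflare computes its analytics.

```python
from collections import Counter
from typing import Dict, Iterable, Mapping

# Naive, non-exhaustive hints; real bot detection is far more involved.
BOT_HINTS = ("bot", "crawler", "spider")


def looks_like_bot(user_agent: str) -> bool:
    ua = user_agent.lower()
    return any(hint in ua for hint in BOT_HINTS)


def summarise(requests: Iterable[Mapping[str, str]]) -> Dict[str, object]:
    """Count human vs. bot requests and tally referrers for the humans."""
    referrers: Counter = Counter()
    humans = bots = 0
    for headers in requests:
        if looks_like_bot(headers.get("User-Agent", "")):
            bots += 1
            continue
        humans += 1
        referrers[headers.get("Referer", "(direct)")] += 1
    return {"humans": humans, "bots": bots, "referrers": dict(referrers)}


sample = [
    {"User-Agent": "Mozilla/5.0 (X11; Linux x86_64) Firefox/120.0",
     "Referer": "https://example.com/"},
    {"User-Agent": "Mozilla/5.0 (compatible; Googlebot/2.1)"},
    {"User-Agent": "Mozilla/5.0 (Macintosh) Safari/605.1.15"},
]
print(summarise(sample))
```

Note that nothing runs in the visitor's browser here, which is where the performance and privacy benefits come from. The trade-off is that the data is only as trustworthy as the headers.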

Serverless

Serverless is, lightly put, the hip and modern version of backends. [5] Instead of having to deal with servers, load balancing, etc., we can focus on the functionality. Serverless lends itself well to the JAMstack (JavaScript + APIs + Markup) approach to writing web apps.

Use cases include: dynamic websites, backend APIs, web apps, scheduled tasks, business logic, and more.

One reason for moving off GitHub Pages is to prepare for backend needs. I don't have an immediate use for serverless features yet; but if I do write web apps in the future, I wouldn't mind having a platform at the ready.

Domain Stuff

Several reasons why I switched from trebledj.github.io to trebledj.me:

- Decoupling. I'd rather maintain my own apex domain than rely on github.io.

- Learning. In the process, I get to touch DNS/networking settings, which are important from a developer and security perspective.

- Personal Reasons. Hosting this website on a custom domain name has been a mini-dream. Migrating to Cloudflare definitely eased the integration process between domain name and site.

Considerations When Choosing a Domain Name

A few tips here.

Choose a top-level domain (TLD) which represents your (personal) brand. A TLD is the last part of your domain name. For example, in trebledj.xyz, the TLD is .xyz. Generally:

- .com for commercial use.

- .org for organisations.

- .dev, .codes for programmers/software-likes.

- There are thousands of TLDs to choose from, but I decided to choose .me because I'm not a corporate entity.

Check domain name history for bad/good reputation. It's possible the domain you're after was once a phishing site that has since been taken down. In any case, it's a good idea to check if the site garnered bad rep in the past as it may impact SEO.

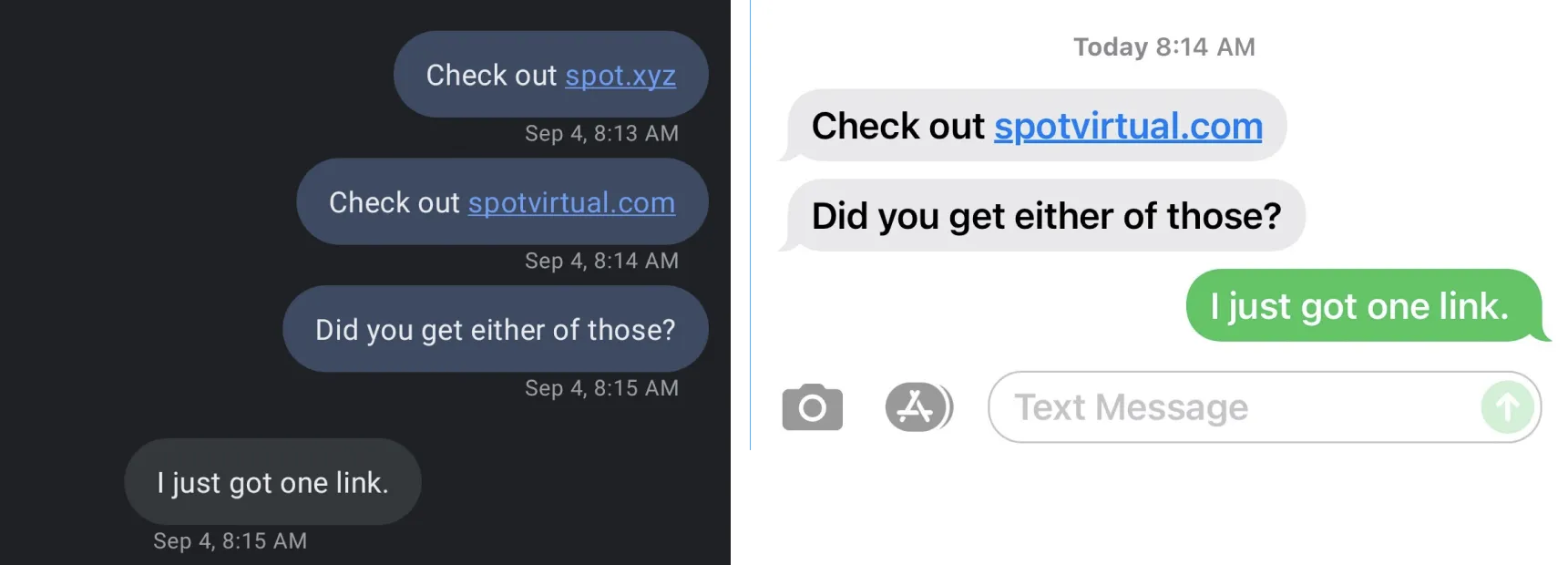

Avoid less reputable domains and TLDs associated with cybercrime.

- .date, .quest, .bid are among TLDs with the highest rate of malicious activity.

- Even TLDs like .xyz, although used by companies like Alphabet Inc. (abc.xyz), have garnered a bad reputation.
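As a quick sanity check before buying, the tips above can be sketched as a tiny script that extracts the TLD and flags the ones mentioned in this post. The set `RISKY_TLDS` only contains the TLDs named above (it is not an authoritative blocklist), and the helper names are made up for illustration.

```python
# TLDs called out in this post; not an authoritative or complete list.
RISKY_TLDS = {"date", "quest", "bid", "xyz"}


def tld_of(domain: str) -> str:
    """Return the last label of a domain name, e.g. 'me' for 'trebledj.me'."""
    return domain.rstrip(".").rsplit(".", 1)[-1].lower()


def is_risky(domain: str) -> bool:
    return tld_of(domain) in RISKY_TLDS


for candidate in ("trebledj.xyz", "trebledj.me"):
    print(candidate, "->", "risky" if is_risky(candidate) else "ok")
```

This only looks at the last label, so it ignores multi-part suffixes such as .co.uk. For a real decision, a reputation lookup on the full domain is still worth doing.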

xyz: an unwise choice

Originally, I wanted to use trebledj.xyz. I really liked .xyz. Turns out many firewalls block .xyz, rendering the site inaccessible to many. Not only that, but emails or links may be silently dropped. The .xyz domain is just too far gone... firewalls and the general public have lost faith in .xyz. And for this reason, I switched out of .xyz.

Sadly, I wasted 3 bubble teas' worth of domain name to learn this valuable lesson. It's a shame that .xyz turned out this way. All those cool xyz websites and domain names out there... blocked by firewalls.

So I've opted for trebledj.me. I would've gone for .io, but it's thrice the price. At least the folks at domain.me seem committed to security and combatting evil domains. Kudos to them.

Choose a domain name provider/registrar. GoDaddy, Domain.com, Namecheap are some well-known ones. They offer discounts, but security features may come with a premium. Cloudflare has security batteries included, but doesn't come with first-year discounts.

Further Reading:

- Palo Alto: TLD Cybercrime - Comprehensive analysis of malicious sites, if you're into security and statistics.

Closing Thoughts

Overall, I decided to migrate to Cloudflare Pages to improve the site's performance and to future-proof it, by having greater control over the backend.

Some more work still needs to be done, though.

- Setting up 301 redirects from the old site, for convenient redirect and migration of site traffic. (guide; done!)

- Implementing a longer browser cache TTL + cache busting.

But other than that I'm quite happy with Cloudflare's ease-of-use, despite its day-long downtime (which coincidentally occurred when I was setting up Cloudflare Pages). Setting up the hook to GitHub repos and deploy pipelines was pretty straightforward.
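For the curious, the 301 redirects in the to-do list above boil down to mapping every URL on the old host to the same path on the new one. Here's a minimal sketch of that mapping. The function name `redirect_for` and the example path are hypothetical, and the actual redirect is configured on the hosting side rather than in Python.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit, urlunsplit

OLD_HOST = "trebledj.github.io"
NEW_HOST = "trebledj.me"


def redirect_for(url: str) -> Optional[Tuple[int, str]]:
    """Return (301, new_url) for URLs on the old host, else None."""
    parts = urlsplit(url)
    if parts.hostname != OLD_HOST:
        return None
    # Keep path, query and fragment so old links land on the same page.
    target = urlunsplit(("https", NEW_HOST, parts.path or "/", parts.query, parts.fragment))
    return 301, target


print(redirect_for("https://trebledj.github.io/posts/example/?ref=rss"))
print(redirect_for("https://example.com/"))  # None: not the old host
```

A 301 (permanent) status, rather than a temporary 302, signals to browsers and search engines that the move is for good.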

Footnotes

1. Vercel's also in the back of my mind, but after running into login issues, I've decided that Netlify covers most of their features anyway.

2. Note: Domain registration is done through a domain registrar and usually requires money. (You might be able to get cheap/free deals on Namecheap, sans security features.) Cloudflare and Netlify are domain registrars. GitHub isn't. This isn't really a big deal. But it's more seamless to deploy your site and set a custom domain on the same service where you register your domain.

3. This isn't really a deal breaker, but included for posterity.

4. Of course, these HTTP headers may be modified, e.g. by using a VPN or a proxy; so these analytics aren't always reliable.

5. Yes, "serverless" is a misnomer; ultimately, there are servers in the background. But the idea is that servers are abstracted away from the programmer.
